Pose Estimation as a Measure of Behaviour

Behavior, with all its complexity and intricacy, is governed by the activity of neurons in the brain. In order to systematically study behavior and connect it to brain mechanisms, we need to reduce it down to measurable metrics. Pose estimation is a technique used to track the location of behaving subjects along with their body parts in time. For example, having a video of a tennis player, pose estimation would give the x, y coordinates of key points associated to desired body parts (like the hands, legs, or the whole body) in each frame of the video. One popular choice for placement of key points is on the joints of the body. If you want to track the arms for example, you could put three key points, one on the wrist, one on the elbow, and of on the shoulder. Traditionally, this was mostly done by placing reflective markers (physical key points) on the body of the subject that could then later be accurately identified through software (other markerless computer vision methods with hand-crafted features were also available but were difficult to work with).

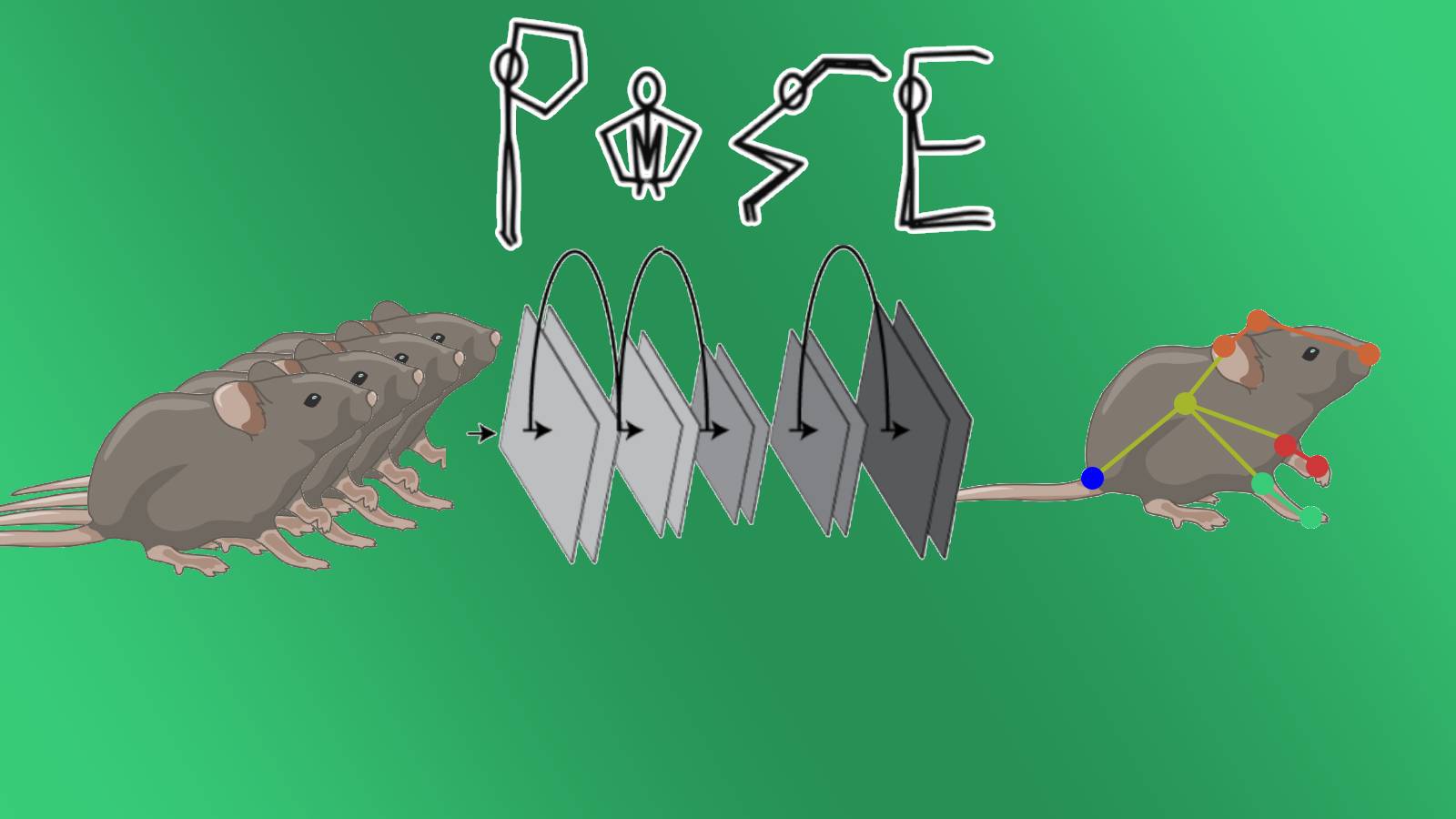

However, this approach is generally expensive and especially in the case of animals, not feasible due to their natural behavior or size. Moreover, manual key point tracking and annotation of animal data is a very labor-intensive process. With the advent of deep learning, there was a boom of new approaches that allowed for robust and efficient pose estimation without the need for markers. Deep neural networks (DNNs) are special types of algorithms that can learn from data. They are built by connecting simple units together in layers to form complex networks. As they are trained on large amounts of information, these connections learn to extract important details and solve problems like recognizing objects in images [7].

In order for these deep networks to learn where to place the key points in each frame of a video, they need to be trained on extremely large amounts of previously annotated datasets. Gathering and annotating this much data is not feasible for most laboratory experiments. In their initial paper, Mathis et al. [5] introduced a method and software package called DeepLabCut (DLC) that allows high quality markerless pose estimation in a way that is computationally efficient, making it accessible to the vast majority of labs. They used an approach known as transfer learning where you take a deep network that was trained on a large amount of data for one task and utilize it on another. Building on top of a network that was pre-trained on a large dataset for object recognition, they showed that the accuracy of their model, trained on as few as 200 manually annotated frames, is on par with human performance in a variety of pose estimation tasks with different animals. The more diverse the annotated frames (i.e., different subjects, camera position, lighting, etc.) the more robust the model is going to be to perturbations [4]. If the model happens to have poor performance on some video later down the line, then DLC allows the user to extract those frame, manually label them, and feed them back to the model for further fine-tuning. This accumulative process allows experimenters to create a robust model during the course of long experiments [9].

Even though this approach is very data efficient, users still have to manually label frames and retrain the model. That is where SuperAnimal models, as introduced in a recent preprint by the team behind DLC, come in [11]. These models merge all the pre-trained pose models on different animals and behavioral contexts together to reduce the need for individual model development. This approach not only saves time and resources but also enables researchers to adapt existing models to new scenarios with minimal additional effort. The DeepLabCut Model Zoo [6] is a web platform that facilitates this collective model building effort. It allows users to access pre-trained pose models, collect and label more data, and share their own models with the global community.

Software developed in academia has always been notorious for its extremely low maintainability, readability, and re-usability. The spaghetti code that is served and put on online repositories is hardly ever used by others after the publication of the paper. Many principal investigators would rather offload software development, often considered as a low status job, to interns and inexperienced members as opposed to spending their hard-earned grant money on professional software engineers. [1] There is, however, a growing call for appreciating and rewarding software development and maintenance in academia [8]. The code for DeepLabCut is fully open source on GitHub and utilizes some of the best software engineering practices such as version control, testing, automated builds, containerization, and packaging. Moreover, there is excellent documentation on how to install and use the software, along with video tutorials and examples. With these, DLC has managed to garner more than four thousand stars on GitHub and three thousand citations on the original paper. In fact, DLC has one of the most active communities on Scientific Community Image Forum [10], a discussion forum for scientific image software, where often times you can see people from the DLC team answer users’ questions and issues.

Conclusion

It is still very challenging to deal with scenes with multiple subjects where from time to time individuals may cover each other and cause occlusions. This also complicates associating key points to the similarly looking animals after they interact with each other. Recent additions to DLC [3], alleviate this problem by using spatial and temporal relationships between the key points to track the identity of animals through time. Depending on how robust the network needs to be, researchers still have to label hundreds of images manually. With advances in point tracking in videos through machine learning [2], it may be possible to create a computer-assisted labeling interface where the user labels the first frame and the machine learning model labels the rest of the frames to the best of its abilities, after which the user can adjust the labels on bad frames and let the model re-track from the adjusted frame. This could potentially reduce the number of manually labeled images by a large factor.

The wide range adaptation of tools like DLC among different scientific communities from neuroscience to rehabilitation outlines the importance of measuring behavior through pose estimation. The incredible advances in machine learning may provide even better tools for quantification of behavior and practical use in the laboratories.

Refrences

- [1] Derek, J. Research software code is likely to remain a tangled mess. https://shape-of-code.coding-guidelines.com/2021/02/21/research-software-code-is-likely-to-remain-a-tangled-mess/ (2021).

- [2] Karaev, N. et al. CoTracker: It is Better to Track Together. http://arxiv.org/abs/2307.07635 (2023) doi:10.48550/arXiv.2307.07635.

- [3] Lauer, J. et al. Multi-animal pose estimation, identification and tracking with DeepLab-Cut. Nat Methods 19, 496–504 (2022).

- [4] Mathis, A. et al. Pretraining Boosts Out-of-Domain Robustness for Pose Estimation. in 1859–1868 (2021).

- [5] Mathis, A. et al. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci 21, 1281–1289 (2018).

- [6] Mathis, M. W. DeepLabCut Model Zoo! http://www.mackenziemathislab.org/dlc-modelzoo.

- [7] Mathis, M. W. & Mathis, A. Deep learning tools for the measurement of animal behavior in neuroscience. Current Opinion in Neurobiology 60, 1–11 (2020).

- [8] Merow, C. et al. Better incentives are needed to reward academic software development. Nat Ecol Evol 7, 626–627 (2023).

- [9] Nath, T. et al. Using DeepLabCut for 3D markerless pose estimation across species and behaviors. Nat Protoc 14, 2152–2176 (2019).

- [10] Rueden, C. T. et al. Scientific Community Image Forum: A discussion forum for scientific image software. PLOS Biology 17, e3000340 (2019).

- [11] Ye, S. et al. SuperAnimal pretrained pose estimation models for behavioral analysis. http://arxiv.org/abs/2203.07436 (2023) doi:10.48550/arXiv.2203.07436.